Table of contents:

It contains a set of all the structures mentioned above except for the sitemap which isn't mandatory.

The rules are addressed to all bots. For example, the "environment" directory is blocked but web crawlers are allowed to enter the "/environment/cache/images/" path. Moreover, the search engine isn’t able to access the shopping cart, login page, copies of the content (index, full), or the internal search engine and comments section.

It contains a set of all the structures mentioned above except for the sitemap which isn't mandatory.

The rules are addressed to all bots. For example, the "environment" directory is blocked but web crawlers are allowed to enter the "/environment/cache/images/" path. Moreover, the search engine isn’t able to access the shopping cart, login page, copies of the content (index, full), or the internal search engine and comments section.

Source: https://www.google.com/webmasters/tools/robots-testing-tool[/caption]

With the use of this tool, you can efficiently check whether particular elements on your website are visible to the robots. For example, they won't be able to access the /wp/wp-admin/test.php address due to the imposed restrictions marked in red.

You can inform Google that your robots.txt file has been updated by using the "submit" option and ask it to re-crawl your site.

Source: https://www.google.com/webmasters/tools/robots-testing-tool[/caption]

With the use of this tool, you can efficiently check whether particular elements on your website are visible to the robots. For example, they won't be able to access the /wp/wp-admin/test.php address due to the imposed restrictions marked in red.

You can inform Google that your robots.txt file has been updated by using the "submit" option and ask it to re-crawl your site.

- Robots.txt – What Is It?

- Elements That Shouldn’t Be Crawled

- Robots.txt Files Are Only Recommendations

- Robots.txt Generators – How to Create the File?

- Directives for the Robots.txt Files

- Where to Place the Robots.txt File?

- Robots.txt File - The Takeaway

Robots.txt - What Is It?

The robots.txt file is one of the elements used for communication with web crawlers. Robots search for this particular file right after entering a website. It consists of a combination of commands that comply with the Robots Exclusion Protocol standard - a "language" understood by bots. Thanks to it, website owners are able to navigate robots and limit their access to resources such as graphics, styles, scripts, or specific subpages on the website that don’t need to be shown in the search results.Elements That Shouldn’t Be Crawled

It’s been ages since websites stopped being simple files that contain nothing apart from texts. Most online stores include numerous subpages that aren’t valuable in terms of the search results or even lead to the creation of internal duplicate content.

To learn more check our article on duplicate content

Robots shouldn’t have access to elements like shopping carts, internal search engines, order procedures, or user panels.

Why?

Because the design of these elements can not only cause unnecessary confusion but also negatively affect the visibility of the site in SERPs. You should also consider blocking copies of subpages made by CMSes as they can increase your internal duplicate content.

Be Careful!

Creating rules that allow you to navigate web crawlers requires perfect knowledge of the website structure. Using an incorrect command can prevent Google robots from accessing the whole website content or its important parts. This, in turn, can lead to counterproductive effects - your site may completely disappear from the search results.

Robots.txt Files Are Only Recommendations

Web crawlers may decide to follow your suggestions, however, for many reasons you can’t force them to observe any commands placed in the abovementioned communication protocol. First of all, Googlebot isn’t the only robot scanning websites. Although creators of the world’s leading search engine ensure that their crawlers respect the recommendations of website owners, other bots aren’t necessarily so helpful. Moreover, a given URL can also be crawled when another indexed website links to it. Depending on your needs, there are several ways to protect yourself from such a situation. For example, you can apply the noindex meta tag or the “X-Robots-Tag” HTTP header. It’s also possible to protect personal data with a password as web crawlers aren’t able to crack it. In the case of the robots.txt file, it’s not necessary to delete the data from the search engine index, it’s enough to simply hide it.Robots.txt Generators - How to Create the File?

The Internet abounds in robots.txt generators and very often CMSes are equipped with special mechanisms that make it easier for users to create such files. The chances that you'll have to prepare instructions manually are rather small. However, it's worth learning the basic structures of the protocol, namely rules, and commands that can be given to web crawlers.Structures

Start by creating the robots.txt file. According to Google's recommendations, you should apply the ASCII or UTF-8 character encoding systems. Keep everything as simple as possible. Use a few keywords ending with a colon to give commands and to create access rules. User-agent: - specifies the recipient of the command. Here you need to enter the name of the web crawler. It’s possible to find an extensive list of all names online (http://www.robotstxt.org/db.html), however, in most cases, you’ll probably wish to communicate mainly with Googlebot. Nevertheless, if you want to give commands to all robots, just use the asterisk "*". So an exemplary first line of the command for Google robots looks like this: User-agent: Googlebot Disallow: - here you provide the URL that shouldn't be scanned by the bots. The most common methods include hiding the content of entire directories by inserting an access path that ends with the "/" symbol, for example: Disallow: /blocked / or files: Disallow: /folder/blockedfile.html Allow: - If any of your hidden directories contains content that you'd like to make available to web crawlers, enter its file path after “Allow”: Allow: /blocked/unblockeddirectory/ Allow: /blocked/other/unblockedfile.html Sitemap: - it allows you to define the path to the sitemap. However, this element isn't obligatory for the robots.txt file to work properly. For example: Sitemap: http://www.mywonderfuladdress.com/sitemap.xmlDirectives For the Robots.txt Files

The Default Setting

First of all, remember that web crawlers assume that they're allowed to scan the entire site. So, if your robots.txt file is supposed to look like this: User-agent: * Allow: / then you don't need to include it in the site directory. Bots will crawl the website according to their own preferences. However, you can always insert the file to prevent possible errors during website analysis.Size Of Letters

Even though it might be surprising, robots are capable of recognizing small and capital letters. Therefore, they'll perceive file.php and File.php as two different addresses.The Power Of the Asterisk

The asterisk - * mentioned earlier is another very useful feature. In Robots Exclusion Protocol it informs that it’s permitted to place any sequence of characters of unlimited length (also zero) in a given space. For example: Disallow: /*/file.html will apply to both the file in: /directory1/file.html and the one in the folder: /folder1/folder2/folder36/file.html The asterisk can also serve other purposes. If you place it before a particular file extension, then, the rule is applicable to all files of this type. For example: Disallow: /*.php will apply to all .php files on your site (except the "/" path, even if it leads to a file with the .php extension), and the rule: Disallow: /folder1/test* will apply to all files and folders in folder1 beginning with the word “test”.End of the Sequence of Characters

Don't forget about the "$" operator which indicates the end of the address. This way, using the rule: User-agent: * Disallow: /folder1/ Allow: /folder1/*.php$ suggests the bots not to index the content in folder1 but at the same time allows them to scan the .php files inside the folder. Paths containing uploaded parameters like: http://mywebsite.com/catalogue1/page.php?page=1 aren’t crawled by bots. However, such issues can be easily solved with canonical URLs.Comments

If the created file or your website is complex, it’s advisable to add comments explaining your decisions. It’s a piece of cake - just insert a "#" at the beginning of the line and web crawlers will simply skip this part of the content during scanning the site.A Few More Examples

You already know the rule that unlocks access to all files, however, it’s also worth learning the one that makes web crawlers leave your site. User-agent: * Disallow: / If your website isn't displayed in the search results, check whether the robots.txt file doesn't contain the abovementioned command. In the screenshot below you can see a robots.txt file example found on an online store website: It contains a set of all the structures mentioned above except for the sitemap which isn't mandatory.

The rules are addressed to all bots. For example, the "environment" directory is blocked but web crawlers are allowed to enter the "/environment/cache/images/" path. Moreover, the search engine isn’t able to access the shopping cart, login page, copies of the content (index, full), or the internal search engine and comments section.

It contains a set of all the structures mentioned above except for the sitemap which isn't mandatory.

The rules are addressed to all bots. For example, the "environment" directory is blocked but web crawlers are allowed to enter the "/environment/cache/images/" path. Moreover, the search engine isn’t able to access the shopping cart, login page, copies of the content (index, full), or the internal search engine and comments section.

Where to Place the Robots.txt File?

If you’ve already created a robots.txt file compliant with all standards, all you need to do is to upload it to the server. It has to be placed in your main host directory. Any other location will prevent bots from finding it. So, an exemplary URL looks like this: http://mywebsite.com/robots.txt If your website has a few versions of URL (such as the ones with http, https, www, or without www), it’s advisable to apply appropriate redirects to one, main domain. Thanks to it, the site will be crawled properly.Information for Google

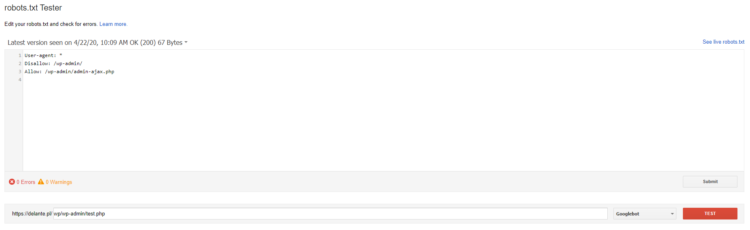

A correctly located file is easily recognized by the search engine robots. However, you can also facilitate their task. Google allows Search Console users to examine their current files, check whether any planned modifications work properly, and upload new robots.txt files. Links in the official Google documentation redirect to the old version of GSC, therefore we’re going to use it as well. [caption id="attachment_24866" align="aligncenter" width="750"] Source: https://www.google.com/webmasters/tools/robots-testing-tool[/caption]

With the use of this tool, you can efficiently check whether particular elements on your website are visible to the robots. For example, they won't be able to access the /wp/wp-admin/test.php address due to the imposed restrictions marked in red.

You can inform Google that your robots.txt file has been updated by using the "submit" option and ask it to re-crawl your site.

Source: https://www.google.com/webmasters/tools/robots-testing-tool[/caption]

With the use of this tool, you can efficiently check whether particular elements on your website are visible to the robots. For example, they won't be able to access the /wp/wp-admin/test.php address due to the imposed restrictions marked in red.

You can inform Google that your robots.txt file has been updated by using the "submit" option and ask it to re-crawl your site.

![How to Create an Ad That Will STAND Out? + [VIDEO]](jpg/how-to-create-ads-that-stand-out.jpg)

Robots.txt looks terrific at first sight, but it is a vital element of SEO. You have explained it very nicely so anyone can get understand it easily. The syntax or rules of the robots.txt file are defined easily.